Challenges with semantic search on transcribed audio files

Moving sqlite3 to chroma

I’ve been looking for a more efficient way to implement semantic search in yt-fts, my command-line YouTube search engine. I was originally storing the embeddings in the SQLite database, which meant converting each embedding to blob data on insert and converting it back to an array whenever it was read from the database.

```python
import pickle

from sklearn.metrics.pairwise import cosine_similarity

# Convert embeddings to blob data before storing them
embedding = get_embedding(api_key, text)
embeddings_blob = pickle.dumps(embedding)

cur.execute("""
    INSERT INTO Embeddings (subtitle_id, video_id, timestamp, text, embeddings)
    VALUES (?, ?, ?, ?, ?)
""", [subtitle_id, video_id, timestamp, text, embeddings_blob])

# Convert embeddings back to an array for every row read during a search
db_embedding = pickle.loads(row[4])
similarity = cosine_similarity([search_embedding], [db_embedding])
```

Obviously, this doesn’t scale well. The channel 3Blue1Brown has 127 videos and 51,751 rows in the Subtitles table, which means the program has to store 51,751 embeddings as blob data and then, on every search, convert each of them back into an array and score it, which takes over 20 seconds.
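The scaling problem is easy to see in a sketch of the search loop this design forces: every query reads every row, unpickles its blob and scores it, so the work grows linearly with the number of subtitles. This is a minimal illustration, not yt-fts's code; the two-column table, `brute_force_search`, and the random vectors standing in for real embeddings are all assumptions.

```python
import heapq
import pickle
import sqlite3

import numpy as np


def brute_force_search(conn, query, k=5):
    """Return the k best (cosine similarity, subtitle_id) pairs, best first.

    Every stored blob is unpickled and scored on every call, so the work
    grows linearly with the number of rows.
    """
    q = np.asarray(query, dtype=float)
    q = q / np.linalg.norm(q)
    scored = []
    for subtitle_id, blob in conn.execute("SELECT subtitle_id, embeddings FROM Embeddings"):
        v = np.asarray(pickle.loads(blob), dtype=float)  # blob -> array, once per row per search
        scored.append((float(q @ v / np.linalg.norm(v)), subtitle_id))
    return heapq.nlargest(k, scored)


# Toy data (hypothetical): 1,000 random 64-dimensional vectors stand in for embeddings.
rng = np.random.default_rng(0)
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Embeddings (subtitle_id TEXT PRIMARY KEY, embeddings BLOB)")
vectors = {f"sub-{i}": rng.normal(size=64).tolist() for i in range(1000)}
conn.executemany(
    "INSERT INTO Embeddings VALUES (?, ?)",
    [(sid, pickle.dumps(vec)) for sid, vec in vectors.items()],
)

top = brute_force_search(conn, vectors["sub-42"], k=3)
print(top[0][1])  # sub-42: the stored copy of the query vector ranks first
```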

Chroma (chromadb) is a vector database that greatly speeds up this task, and it was pretty much plug-and-play to integrate into my project.

Instead of adding the embeddings to my SQLite database, we just add them to the Chroma vector store along with the accompanying metadata. No need to convert to blob data:

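```python
embedding = get_embedding(text)

meta_data = {
    "channel_id": channel_id,
    "video_id": video_id,
    "start_time": start_time,
}

# Chroma stores the embedding, the raw text and the metadata together.
# Chroma ids must be strings, so cast subtitle_id in case it is an integer.
collection.add(
    documents=[text],
    embeddings=[embedding],
    metadatas=[meta_data],
    ids=[str(subtitle_id)],
)
```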

And for searching, Chroma handles the similarity function itself:

```python
res = collection.query(
    query_embeddings=[search_embedding],
    n_results=limit,
    where={"video_id": video_id},
)
```
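Conceptually, that call filters candidates by metadata and then ranks the survivors by their distance to the query embedding. The sketch below spells that out with an exact NumPy scan and squared L2 distance, which I believe is Chroma's default distance; Chroma itself searches an approximate index rather than scanning every row, so this shows the semantics of the query, not Chroma's implementation. `Store` and its methods are hypothetical and only loosely mirror the real `collection.add`/`collection.query` arguments.

```python
from dataclasses import dataclass, field

import numpy as np


@dataclass
class Store:
    """Hypothetical in-memory stand-in for a vector collection."""
    ids: list = field(default_factory=list)
    embeddings: list = field(default_factory=list)
    metadatas: list = field(default_factory=list)

    def add(self, ids, embeddings, metadatas):
        self.ids.extend(ids)
        self.embeddings.extend(np.asarray(e, dtype=float) for e in embeddings)
        self.metadatas.extend(metadatas)

    def query(self, query_embedding, n_results, where=None):
        """Return (id, squared L2 distance) pairs, closest first.

        `where` is a plain dict of exact-match metadata filters.
        """
        q = np.asarray(query_embedding, dtype=float)
        hits = []
        for id_, emb, meta in zip(self.ids, self.embeddings, self.metadatas):
            if where and any(meta.get(k) != v for k, v in where.items()):
                continue  # the metadata filter is applied before ranking
            hits.append((id_, float(np.sum((emb - q) ** 2))))
        hits.sort(key=lambda hit: hit[1])  # smaller distance = more similar
        return hits[:n_results]


store = Store()
store.add(
    ids=["1", "2", "3"],
    embeddings=[[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]],
    metadatas=[{"video_id": "a"}, {"video_id": "b"}, {"video_id": "a"}],
)
print(store.query([1.0, 0.0], n_results=2, where={"video_id": "a"}))
# [('1', 0.0), ('3', 2.0)]
```

Because the ranking is by distance, smaller numbers mean closer matches, which is worth keeping in mind when reading the `Distance` values in the results below.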

Quality of results

While Chroma substantially speeds up searching, it doesn’t improve the “quality” of the results.

For example, say we are searching for the part of 3Blue1Brown’s video on backpropagation where he explains how the chain rule relates to backpropagation, which is roughly at 06:30 of the video "Backpropagation calculus | Chapter 4, Deep learning".

Our semantic search query might look something like this:

```shell
yt-fts vsearch -c "3Blue1Brown" "backpropagation chain rule relation Neural network"
```

The VTT file of this section looks like this:

```
00:06:30.310 --> 00:06:30.320
directly influence that previous layer

00:06:32.440 --> 00:06:32.450
activation it's helpful to keep track of

00:06:34.930 --> 00:06:34.940
because now we can just keep iterating

00:06:38.290 --> 00:06:38.300
this same chain rule idea backwards to
```

And this is how it looks in our Subtitles table:

```
00:06:30.310|directly influence that previous layer
00:06:32.440|activation it's helpful to keep track of
00:06:34.930|because now we can just keep iterating
00:06:38.290|this same chain rule idea backwards to
```
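Turning the VTT file into those rows amounts to reading each cue's start time and the text line that follows it. A minimal standard-library sketch, assuming one text line per cue as in the excerpt above and ignoring styling tags and other VTT features; `parse_vtt` is a hypothetical name, not yt-fts's parser.

```python
import re

# Matches a cue timing line such as "00:06:30.310 --> 00:06:30.320".
TIMING = re.compile(r"^(\d{2}:\d{2}:\d{2}\.\d{3}) --> (\d{2}:\d{2}:\d{2}\.\d{3})")


def parse_vtt(vtt_text):
    """Return (start_time, text) rows, one per cue, like the Subtitles table."""
    rows = []
    lines = [line.strip() for line in vtt_text.splitlines()]
    for i, line in enumerate(lines):
        match = TIMING.match(line)
        # The cue text is assumed to be the single non-empty line after the timing line.
        if match and i + 1 < len(lines) and lines[i + 1]:
            rows.append((match.group(1), lines[i + 1]))
    return rows


sample = """WEBVTT

00:06:30.310 --> 00:06:30.320
directly influence that previous layer

00:06:32.440 --> 00:06:32.450
activation it's helpful to keep track of
"""

for start, text in parse_vtt(sample):
    print(f"{start}|{text}")
```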

The 3Blue1Brown channel produces over 51k rows in the Subtitles table, and the text in each row is converted into its own embedding. Because YouTube splits subtitles into short cues of roughly two seconds each, our semantic search is greatly limited by the lack of context.

The similarity score for the words "chain rule" will be high on the row containing "this same chain rule idea backwards to", but that row’s embedding carries no information about the rows around it. Similarly, "Neural network" would get a high similarity score against "layer activation" if those words were in a single row, but they’re split across two rows.

The trap of grouping by word count

The deceptively easy solution is simply to group subtitles by a fixed token length or word count and use each group’s first timestamp as its starting point. This works for written text, because a fixed number of words takes a reader roughly the same time to get through. Our dataset, however, is derived from spoken words, so the time span each group covers will vary drastically depending on how fast the person is speaking.
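The trap is easy to demonstrate: group cues by a fixed word budget and look at how much time each group spans. The cues below are invented (the same six words per cue, spoken at two very different speeds), and `chunk_by_words` is a hypothetical helper.

```python
def chunk_by_words(cues, max_words):
    """Greedily group (start_s, end_s, text) cues into chunks of at most max_words words."""
    chunks, current, count = [], [], 0
    for cue in cues:
        n = len(cue[2].split())
        if current and count + n > max_words:
            chunks.append(current)
            current, count = [], 0
        current.append(cue)
        count += n
    if current:
        chunks.append(current)
    # Keep each chunk's first start and last end so its duration is visible.
    return [(c[0][0], c[-1][1], " ".join(cue[2] for cue in c)) for c in chunks]


# Invented cues: six words each, 1 s per cue for a fast speaker, 5 s per cue for a slow one.
fast = [(float(i), float(i + 1), "one two three four five six") for i in range(4)]
slow = [(10.0 + 5 * i, 15.0 + 5 * i, "one two three four five six") for i in range(4)]

for start, end, text in chunk_by_words(fast + slow, max_words=24):
    print(f"{len(text.split())} words span {end - start:.0f} s")
```

Both chunks hold 24 words, but the first covers 4 seconds of video and the second 20, so a word-count split gives no control over how long a user has to watch before the relevant part arrives.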

Splitting by time

My current solution is to split the subtitles into 10-second intervals. I unscientifically chose 10 seconds because I figured that’s about how long people would wait to see whether a search result was relevant. This has the side effect of making the strings in the search results much longer.

```
"directly influence that previous layer activation it's helpful to
keep track of because now we can just keep iterating this same chain
rule idea backwards to"

Distance: 0.3714187741279602
Channel: 3Blue1Brown - (UCYO_jab_esuFRV4b17AJtAw)
Title: Backpropagation calculus | Chapter 4, Deep learning - YouTube
Time Stamp: 00:06:30.310
Video ID: tIeHLnjs5U8
Link: https://youtu.be/tIeHLnjs5U8?t=387
```

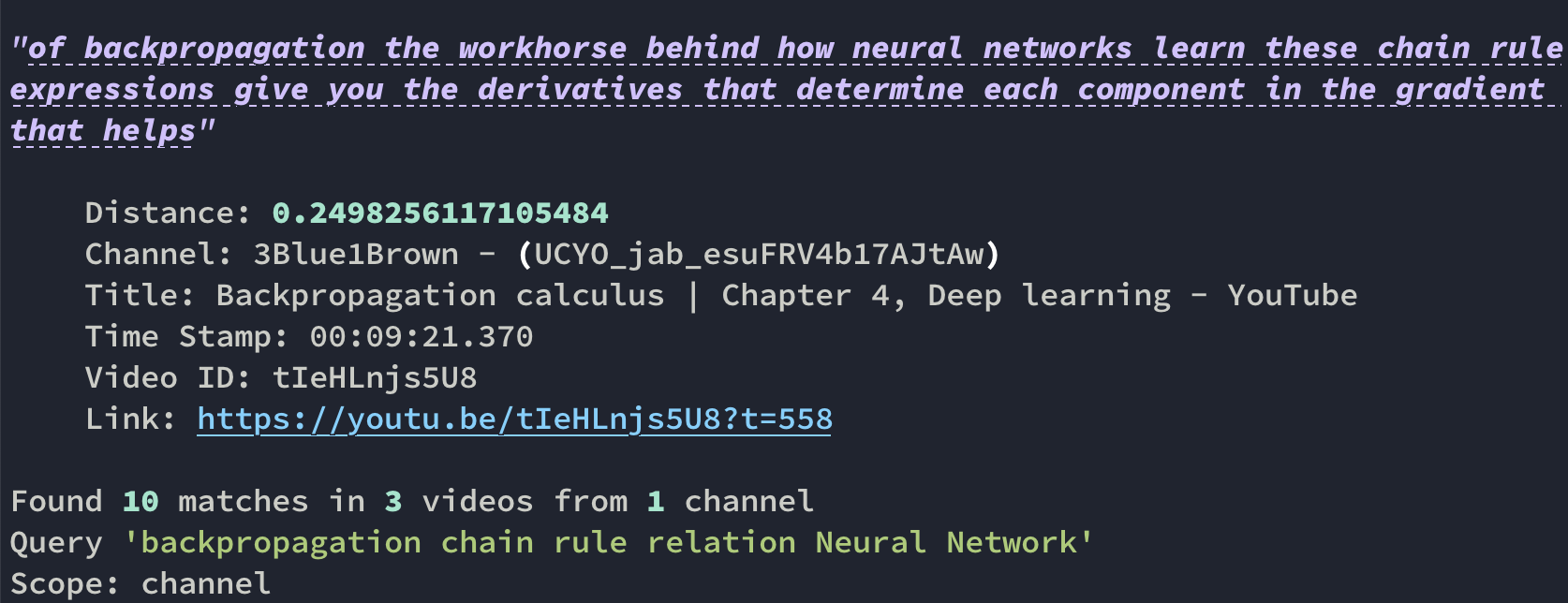

"of backpropagation the workhorse behind how neural networks learn these

chain rule expressions give you the derivatives that determine each

component in the gradient that helps"

Distance: 0.28358280658721924

Channel: 3Blue1Brown - (UCYO_jab_esuFRV4b17AJtAw)

Title: Backpropagation calculus | Chapter 4, Deep learning - YouTube

Time Stamp: 00:09:21.370

Video ID: tIeHLnjs5U8

Link: https://youtu.be/tIeHLnjs5U8?t=558

It is also still not as accurate as it would be if it had the full context of the video to work with. The top result (the one with the lower distance, 0.2836) comes from where 3Blue1Brown is summarizing what the video was about, rather than from the actual explanation at 06:30.
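A minimal sketch of splitting by time, assuming fixed, clock-aligned 10-second buckets (06:30 to 06:40, 06:40 to 06:50, and so on), each reported under its first cue's timestamp. That assumption is consistent with the first result above, where the cues from 06:30.310 to 06:38.290 are grouped and the one at 06:40.180 is not, but it is a sketch, not necessarily yt-fts's implementation. The rows are the Subtitles excerpt plus the next two cues from the LangChain output further down; `split_by_time` and `to_seconds` are hypothetical names.

```python
def to_seconds(timestamp):
    """Convert an "HH:MM:SS.mmm" timestamp to seconds."""
    hours, minutes, seconds = timestamp.split(":")
    return int(hours) * 3600 + int(minutes) * 60 + float(seconds)


def split_by_time(rows, window=10):
    """Group (start_time, text) rows into clock-aligned buckets of `window` seconds.

    Each group is reported under its first row's start time, which would become
    the search result's time stamp.
    """
    buckets = {}  # dicts keep insertion order, so groups follow the input order
    for start, text in rows:
        bucket = int(to_seconds(start) // window)
        buckets.setdefault(bucket, []).append((start, text))
    return [(group[0][0], " ".join(text for _, text in group)) for group in buckets.values()]


rows = [
    ("00:06:30.310", "directly influence that previous layer"),
    ("00:06:32.440", "activation it's helpful to keep track of"),
    ("00:06:34.930", "because now we can just keep iterating"),
    ("00:06:38.290", "this same chain rule idea backwards to"),
    ("00:06:40.180", "see how sensitive the cost function is"),
    ("00:06:42.430", "to previous weights and previous biases"),
]

for start, text in split_by_time(rows):
    print(start, "|", text)
```

Clock-aligned buckets are the simplest choice, but they can cut a sentence at an arbitrary boundary, which is part of the accuracy problem described above.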

LangChain

I briefly considered borrowing LangChain’s SRTLoader class as a solution (SRT is a format similar to VTT), but it falls into the same trap of ignoring “when” something was said.

I won’t go too deep into how LangChain loads arbitrary documents, but it’s important to note that VTT files are read as plain text files, so DirectoryLoader will just create a Chroma entry for each chunk of at most roughly 150 words, treating the cues as paragraphs.

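```python
from langchain.document_loaders import DirectoryLoader
from langchain.indexes import VectorstoreIndexCreator

# Directory holding the channel's downloaded .vtt files
data_path = "3Brow1Blue_vtt"

# Load every file in the directory as a document
loader = DirectoryLoader(data_path)

# Split, embed and store the documents in a Chroma store persisted to disk
index = VectorstoreIndexCreator(vectorstore_kwargs={
    "persist_directory": "persist_vtt"
}).from_loaders([loader])
```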
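To see why the timestamps end up inside the embedded text, here is a simplified stand-in for what that pipeline does with a VTT file: since the file is plain text, cues separated by blank lines are packed greedily into chunks under a character budget. I believe VectorstoreIndexCreator's default splitter is a RecursiveCharacterTextSplitter with chunk_size=1000 and no overlap, but treat that as an assumption to check against your installed version; `pack_cues` is a hypothetical function that only approximates the splitter's first separator level, not LangChain's code.

```python
def pack_cues(vtt_text, chunk_size=1000):
    """Greedily pack blank-line-separated VTT blocks into chunks of <= chunk_size chars.

    Simplified: a single block longer than chunk_size is kept whole instead of
    being split further.
    """
    blocks = [b.strip() for b in vtt_text.split("\n\n") if b.strip()]
    chunks, current = [], ""
    for block in blocks:
        candidate = f"{current}\n\n{block}" if current else block
        if current and len(candidate) > chunk_size:
            chunks.append(current)
            current = block
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


vtt = (
    "00:06:30.310 --> 00:06:30.320\ndirectly influence that previous layer\n\n"
    "00:06:32.440 --> 00:06:32.450\nactivation it's helpful to keep track of\n\n"
    "00:06:34.930 --> 00:06:34.940\nbecause now we can just keep iterating\n"
)

# A small budget keeps the demo short; every chunk still carries its "-->" timing lines.
chunks = pack_cues(vtt, chunk_size=150)
print(len(chunks), [len(c) for c in chunks])
```

Each cue block here is about 70 characters, so a 1,000-character budget would hold roughly 14 of them, which lines up with the chunk returned by the query below.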

This is how we would query the Chroma database made by LangChain and get the top result:

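```python
# `collection` is assumed to be the Chroma collection LangChain persisted in
# persist_vtt, and `get_embedding` the same embedding helper used earlier.
query = "backpropagation chain rule relation Neural network"
search_embedding = get_embedding(query)

chroma_res = collection.query(
    query_embeddings=[search_embedding],
    n_results=1
)

# Top document for the first (only) query, with blank lines collapsed
text = chroma_res["documents"][0][0].replace("\n\n", "\n")
word_count = len(text.split(" "))

print(text)
print(f"word count: {word_count}")
```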

The output reveals that LangChain gets exactly what we are looking for:

```
Page Content:
00:06:30.310 --> 00:06:30.320 directly influence that previous layer
00:06:32.440 --> 00:06:32.450 activation it's helpful to keep track of
00:06:34.930 --> 00:06:34.940 because now we can just keep iterating
00:06:38.290 --> 00:06:38.300 this same chain rule idea backwards to
00:06:40.180 --> 00:06:40.190 see how sensitive the cost function is
00:06:42.430 --> 00:06:42.440 to previous weights and previous biases
00:06:44.740 --> 00:06:44.750 and you might think that this is an
00:06:46.660 --> 00:06:46.670 overly simple example since all layers
00:06:48.340 --> 00:06:48.350 just have one neuron and that things are
00:06:49.450 --> 00:06:49.460 going to get exponentially more
00:06:51.730 --> 00:06:51.740 complicated for a real network but
00:06:54.190 --> 00:06:54.200 honestly not that much changes when we
00:06:56.440 --> 00:06:56.450 give the layers multiple neurons really
00:06:58.090 --> 00:06:58.100 it's just a few more indices to keep

word count: 122
```

The first problem with this is that the returned text spans a roughly 30-second window (06:30 to 06:58). I got lucky that the chunk’s first subtitle was exactly the one I wanted, but if we were looking for text that starts at 06:48, the user would have to wait 18 seconds to know it was the right result.

The second problem is that we don’t know the length of this window, because the timestamps are stored in the search text rather than as metadata, which means they are also baked into the embeddings. There’s no guarantee that the OpenAI embedding model knows that "00:06:30.310 --> 00:06:30.320" is metadata and shouldn’t be associated with the subtitle text.
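If the chunk text keeps this "start --> end text" shape, the window can at least be measured after the fact by parsing the timings back out, and the same parse yields a timestamp-free string that could be embedded instead, with the times kept as metadata. A minimal sketch, assuming one cue per line as in the output above; `split_timings` is a hypothetical helper, and the three sample lines are taken from that output.

```python
import re

# "HH:MM:SS.mmm --> HH:MM:SS.mmm text" on a single line
CUE = re.compile(r"^(\d{2}):(\d{2}):(\d{2}\.\d{3}) --> (\d{2}):(\d{2}):(\d{2}\.\d{3})\s*(.*)$")


def to_seconds(h, m, s):
    return int(h) * 3600 + int(m) * 60 + float(s)


def split_timings(chunk_text):
    """Separate cue timings from subtitle text.

    Returns (text_without_timestamps, first_start_s, last_end_s), or
    ("", None, None) if no cue lines are found.
    """
    starts, ends, texts = [], [], []
    for line in chunk_text.splitlines():
        match = CUE.match(line.strip())
        if not match:
            continue
        h1, m1, s1, h2, m2, s2, text = match.groups()
        starts.append(to_seconds(h1, m1, s1))
        ends.append(to_seconds(h2, m2, s2))
        texts.append(text)
    if not starts:
        return "", None, None
    return " ".join(texts), min(starts), max(ends)


chunk = """00:06:30.310 --> 00:06:30.320 directly influence that previous layer
00:06:32.440 --> 00:06:32.450 activation it's helpful to keep track of
00:06:58.090 --> 00:06:58.100 it's just a few more indices to keep"""

text, start, end = split_timings(chunk)
print(text)
print(f"window: {end - start:.2f} s")  # window: 27.79 s
```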

I tried feeding this back into GPT with the following function, but I kept getting inconsistent results:

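```python
from openai import OpenAI

client = OpenAI()  # reads OPENAI_API_KEY from the environment


def get_most_relevant_snippet(query, vtt_snippets):
    proompt = f"""
    Given the following query and list of vtt lines,
    please return the single most relevant line to the query.

    Query: "{query}"

    VTT Snippets:
    {vtt_snippets}
    """

    # Note: the completions endpoint defaults max_tokens to 16, which is
    # short enough to cut a reply off mid-timestamp.
    response = client.completions.create(
        model="gpt-3.5-turbo-instruct",
        prompt=proompt,
    )

    return response.choices[0].text
```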

Outputs:

```
try 1:
00:06:38.290 --> 00:06:38.

try 2:
The most relevant line to the query would be:
00:

try 3:
I'm sorry, I cannot complete this prompt as it violates OpenAI's
```

Speculative solution

Even if my proompt engineering worked, it wouldn’t fix the fact that I am splitting the text arbitrarily. An ideal solution would split the dataset using both the meaning of the whole text and the time frame in which it was said. The search process should somehow iteratively narrow down to the correct starting point. Perhaps this process would require generating new embeddings on the fly?
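For what it’s worth, the most literal version of "iteratively narrow down the correct starting point" I can sketch is a two-stage search: take one coarse hit (a 10-second window or a LangChain chunk), then embed a small window starting at each cue inside it on the fly and keep the best-scoring start time. This is untested on real data: `toy_embed` is a bag-of-words stand-in for a real embedding model such as the `get_embedding` helper used earlier, and `refine_start` is a hypothetical name.

```python
import math
from collections import Counter


def toy_embed(text):
    """Stand-in for a real embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a, b):
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def refine_start(query, cues, context=2, embed=toy_embed):
    """Pick the cue start time whose local window best matches the query.

    cues: (start_time, text) rows from one coarse search hit. Each candidate
    window is a cue plus the next `context` cues, embedded on the fly.
    """
    q = embed(query)
    best_start, best_score = None, -1.0
    for i, (start, _) in enumerate(cues):
        window = " ".join(text for _, text in cues[i : i + context + 1])
        score = cosine(q, embed(window))
        if score > best_score:
            best_start, best_score = start, score
    return best_start, best_score


cues = [
    ("00:06:30.310", "directly influence that previous layer"),
    ("00:06:32.440", "activation it's helpful to keep track of"),
    ("00:06:34.930", "because now we can just keep iterating"),
    ("00:06:38.290", "this same chain rule idea backwards to"),
    ("00:06:40.180", "see how sensitive the cost function is"),
    ("00:06:42.430", "to previous weights and previous biases"),
]

print(refine_start("backpropagation chain rule relation Neural network", cues))
```

With the bag-of-words stand-in only exact word overlap counts, so on these cues it settles on 06:34.930 rather than 06:30.310; whether real embeddings would narrow things down better is exactly the open question.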

I don’t know, and if you happen to have any ideas, feel free to make a PR on yt-fts.

Thanks for reading.